Uncategorized

-

New paper published in Topics in Cognitive Science, reviewing the literature on the neurobiology of language in the aging brain (PDF available here). “Language is perhaps the most complex and sophisticated of cognitive faculties in humans. The neurobiological basis of language in the healthy, aging brain remains a relatively neglected topic, in particular with respect…

-

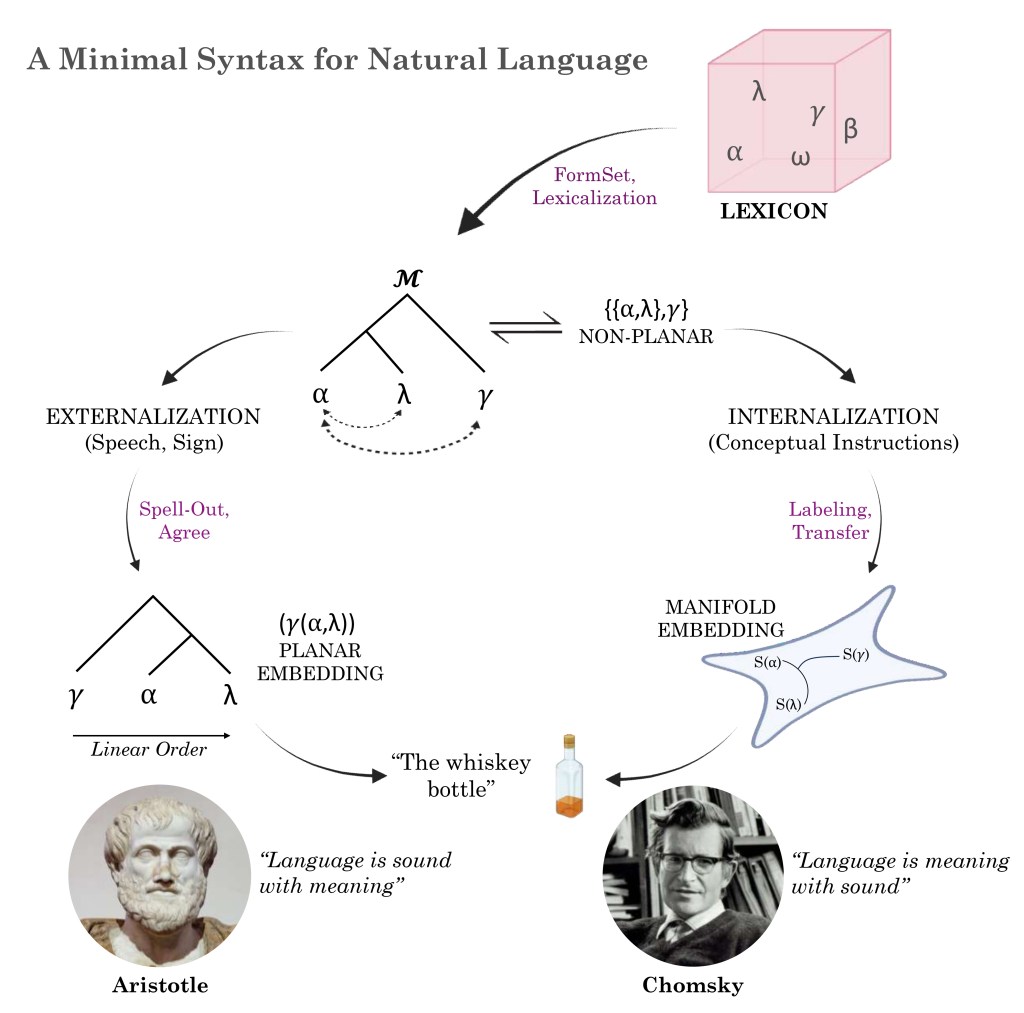

New paper published in Cognitive Neuroscience on the neural code for natural language syntax (PDF here). This paper explores what neural mechanisms can potentially satisfy the demands of the free non-associative commutative magma emerging from contemporary formulations of a Merge-based syntax. These are proposed to be a delimited set of neural signatures that are hypothesized…

-

New chapter published in the volume Biolinguistics at the Cutting Edge that reviews the foundations of linguistic computation and meaning [PDF]. Also, a paper comparing compositional syntax-semantics in DALL-E 2/3 and young children. Lastly, a pre-print in which we assess the compositional linguistic abilities of OpenAI’s o3-mini-high large reasoning model.

-

In this post, I want to outline and compress all of my academic research into a streamlined format. My research has focused mostly on compositionality in formal systems, neural systems, and artificial systems, with common themes being the nature, acquisition, evolution, and implementation of higher-order structure-building in the human mind/brain. In 2024, I developed a…

-

My conversation with Tim and Keith on the MLST podcast is now live. We spoke about semantics, philosophy of mind, large language models, AI ethics, evolution, metaphysics, and the end of the world.

-

Psycholinguistic theory has traditionally pointed to the importance of explicit symbol manipulation in humans, to explain our unique facility for symbolic forms of intelligence such as language and mathematics. A recent report utilizing intracranial recordings in a cohort of three participants argues that language areas in the human brain rely on a continuous vectorial embedding…

-

A three-hour breakdown of a paper published in the Journal of Neurolinguistics, providing some background and context and reviewing the paper section by section. Paper link. Video link. A comprehensive neural model of language must accommodate four components: representations, operations, structures and encoding. Recent intracranial research has begun to map out the feature space associated with syntactic processes,…